In my post Friends don’t let friends run containers as root, I took the simplified view that Kubernetes does not add any security policies of its own.

I made this simplification because, when I wrote the post, Kubernetes 1.22 was moving away from Pod Security Policies, and no replacement had yet been enabled by default in a released version.

Beginning with Kubernetes 1.23, the Pod Security admission controller, which enforces the Pod Security Standards, is enabled by default as a beta feature and replaces Pod Security Policies.

Kubernetes administrators can choose among three policy profiles: privileged, baseline, and restricted.

The policies are cumulative: baseline contains all the rules from privileged, and restricted contains all the rules from both privileged and baseline.

| Profile | Description |
|---|---|
| Privileged | Unrestricted policy, providing the broadest possible level of permissions. This policy allows for known privilege escalations. |
| Baseline | Minimally restrictive policy, which prevents known privilege escalations. Permits the default (minimally specified) Pod configuration. |
| Restricted | Heavily restricted policy, following current Pod hardening best practices. |

See Kubernetes - Pod Security Standards for more details.

Note: On the Kubernetes website, the terms policy profile and level are used interchangeably. The term policy profile is used for a set of policies, whereas the term level is used when Pod Security Standards are enabled. I follow the same pattern in this post.

Select the Security Profile to Prevent Pods Running as Root

To prevent any Pod from running as root, we have to select the profile restricted. Restricted requires the fields

- `spec.securityContext.runAsUser`
- `spec.containers[*].securityContext.runAsUser`
- `spec.initContainers[*].securityContext.runAsUser`
- `spec.ephemeralContainers[*].securityContext.runAsUser`

to be either null or set to a non-zero value.

This is precisely what we want.
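To show where these fields live, here is a minimal sketch of a Pod that sets a non-zero user ID at the Pod level and overrides it for one container. The Pod name, image, and user IDs are hypothetical placeholders. On its own, this manifest only addresses the `runAsUser` rule; a namespace that enforces restricted also checks the other rules of the profile.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: runasuser-demo              # hypothetical name
spec:
  securityContext:
    runAsUser: 1000                 # Pod-level default: any non-zero UID satisfies this rule
  initContainers:
    - name: init
      image: example.com/app:1.0    # hypothetical image
      command: ["sh", "-c", "echo init"]
      # No runAsUser here: the init container inherits 1000 from the Pod level
  containers:
    - name: app
      image: example.com/app:1.0    # hypothetical image
      securityContext:
        runAsUser: 2000             # container-level value overrides the Pod-level one
```

Setting `runAsUser: 0` in any of these places would violate the rule.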

Restricted has more rules you need to comply with.

Please review the list of policies included in the restricted profile to get a better understanding of the other rules.

As you can see, even under the restricted profile, the only requirement on the user ID is that it must not be 0.

This means that you could still run the pod with user IDs 1, 500, or 1827632. If you leave `runAsUser` unset (null), the user ID defined in the image is used. If that is the root user, the container does not start, and you get an error message like “Error: container has runAsNonRoot and image will run as root”. This check happens because the restricted profile also requires `runAsNonRoot` to be set to `true`.
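A minimal sketch of that situation, assuming a hypothetical image `example.com/runs-as-root:1.0` whose Dockerfile does not switch to a non-root USER. The Pod sets the other fields the restricted profile checks, so it is admitted, but it leaves `runAsUser` unset. The kubelet then falls back to the image's user and refuses to start the container.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: root-image-demo                     # hypothetical name
spec:
  securityContext:
    runAsNonRoot: true                      # required by the restricted profile
    seccompProfile:
      type: RuntimeDefault                  # required by the restricted profile
    # runAsUser is deliberately left unset, so the image's USER decides
  containers:
    - name: app
      image: example.com/runs-as-root:1.0   # hypothetical image that runs as root
      securityContext:
        allowPrivilegeEscalation: false     # required by the restricted profile
        capabilities:
          drop: ["ALL"]                     # required by the restricted profile
```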

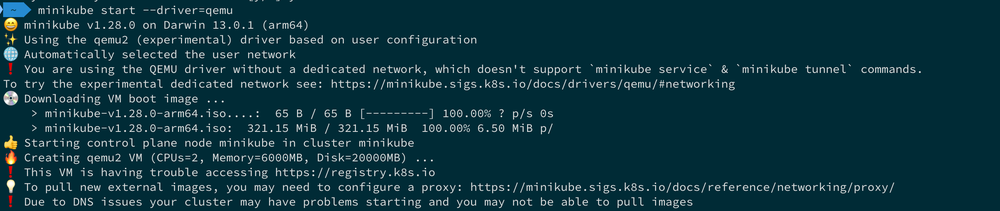

Enabling Pod Security Standards

Enforcing Pod Security Standards is opt-in: unless an administrator configures otherwise, the default level is privileged, so nothing is restricted.

You need to configure them per namespace by applying a label to the namespace.

The label defines which policy profile should be used and what Kubernetes does with a Pod if a policy violation has been detected. The mode can be one of the following:

| Mode | Description |
|---|---|
| enforce | Policy violations will cause the pod to be rejected. |
| audit | Policy violations will trigger the addition of an audit annotation to the event recorded in the audit log but are otherwise allowed. |
| warn | Policy violations will trigger a user-facing warning but are otherwise allowed. |

The label you apply to the namespace takes the form `pod-security.kubernetes.io/<MODE>: <LEVEL>`.
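Because each mode has its own label, the modes do not have to share the same level. A minimal sketch, assuming a hypothetical namespace named `my-app`: it enforces baseline while only warning about and auditing violations of restricted, which shows which Pods would break before you tighten enforcement.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: my-app                                    # hypothetical namespace
  labels:
    pod-security.kubernetes.io/enforce: baseline  # reject Pods that violate baseline
    pod-security.kubernetes.io/warn: restricted   # warn users about restricted violations
    pod-security.kubernetes.io/audit: restricted  # annotate audit events for restricted violations
```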

To require all pods to comply with the baseline level, apply the label `pod-security.kubernetes.io/enforce: baseline`.

If you also want to warn users and record violations in the audit log, add the corresponding `warn` and `audit` labels.

The result is the following namespace manifest:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: default
  labels:
    pod-security.kubernetes.io/enforce: baseline
    pod-security.kubernetes.io/audit: baseline
    pod-security.kubernetes.io/warn: baseline
```

Conclusion

Pod Security Standards are an excellent next step after Kubernetes deprecated Pod Security Policies in v1.21.

At the time of writing (Kubernetes 1.23), the feature is in beta, and an administrator has to configure it per namespace.

Once you begin using the baseline and restricted profiles, you have an effective tool to make the cluster more secure.

The recommendation I give in my post Friends don’t let friends run containers as root changes only slightly for Kubernetes:

- Kubernetes:

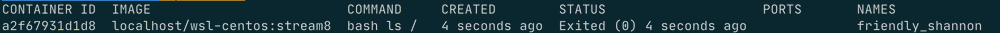

- For running a plain container in Kubernetes, you don’t have to do anything. Kubernetes starts the container with the user ID set by the USER instruction in the Dockerfile.

- The Deployment manifest might need additional configurations depending on the selected Pod Security Standard profile.
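As a sketch of such additional configuration, the following Deployment is written to pass the restricted profile as of Kubernetes 1.23. The names, labels, image, and user ID are hypothetical. It sets `runAsUser` explicitly instead of relying on the image's USER instruction, because when `runAsUser` is unset the kubelet can only verify `runAsNonRoot` if the image's user is numeric.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                              # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      securityContext:
        runAsNonRoot: true                  # restricted: containers must run as non-root
        runAsUser: 10001                    # restricted: must be non-zero (hypothetical UID)
        seccompProfile:
          type: RuntimeDefault              # restricted: RuntimeDefault or Localhost
      containers:
        - name: app
          image: example.com/my-app:1.0     # hypothetical image
          securityContext:
            allowPrivilegeEscalation: false # restricted: must be false
            capabilities:
              drop: ["ALL"]                 # restricted: drop ALL; only NET_BIND_SERVICE may be added back
```

Under baseline, none of these `securityContext` settings are required, since baseline permits the default Pod configuration.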